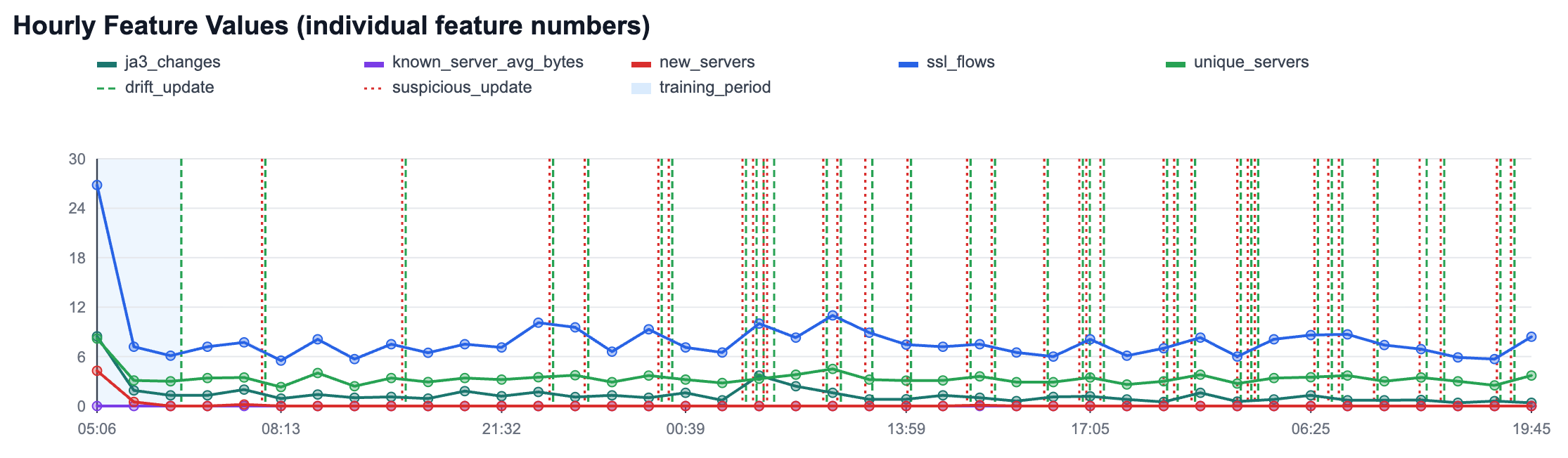

The new HTTPS anomaly detection module in Slips builds per-host adaptive baselines in traffic time, then detects deviations at two levels: per-flow (for bytes to known servers) and per-hour (for host behavior like new servers, unique servers, JA3 changes, and flow volume). It uses online statistics and z-scores for transparent scoring, plus controlled adaptation states (training_fit, drift_update, suspicious_update) to keep learning while reducing poisoning risk.

The result is explainable, operational evidence in clear human text: what changed, confidence, and why it is anomalous.
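The online statistics and z-score idea can be illustrated with a short sketch. This is not the Slips implementation: the class `OnlineBaseline`, the function `observe`, the thresholds, and the rule for when each adaptation state applies are all assumptions made for illustration. Only the state names come from the description above. The running mean and variance use Welford's algorithm, and a suspicious value is clipped before it is learned so that a single outlier cannot drag the baseline far.

```python
import math
from dataclasses import dataclass


@dataclass
class OnlineBaseline:
    """Running mean/variance via Welford's algorithm (hypothetical helper)."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        # Sample standard deviation; 0.0 until two samples have been seen.
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0

    def zscore(self, x: float) -> float:
        s = self.std
        # With zero spread there is no scale to compare against, so return 0.
        return (x - self.mean) / s if s > 0 else 0.0


def observe(baseline: OnlineBaseline, x: float,
            min_samples: int = 5, z_threshold: float = 3.0):
    """Score x against the baseline, then adapt it. Returns (state, z)."""
    if baseline.n < min_samples:
        baseline.update(x)  # still fitting: learn everything
        return "training_fit", 0.0
    z = baseline.zscore(x)
    if abs(z) < z_threshold:
        baseline.update(x)  # normal value: follow slow drift
        return "drift_update", z
    # Assumed policy: learn only a clipped version of the outlier.
    clipped = baseline.mean + math.copysign(z_threshold * baseline.std, z)
    baseline.update(clipped)
    return "suspicious_update", z
```

Because the score is a plain z-score, an alert can state the observed value, the baseline mean, and how many standard deviations separate them.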

NetSecGame - A Framework for Training and Evaluating AI Agents in Network Security Environments

We are excited to announce the release of NetSecGame (NSG) v0.1.0, a framework for training and evaluating AI agents in network security environments. Developed at the Stratosphere Laboratory at CTU in Prague, NSG provides a highly configurable testbed for both offensive and defensive security tasks.

What is NetSecGame?

NetSecGame is a simulation environment designed specifically for cybersecurity scenarios. It enables researchers and developers to create rapid, highly configurable experiments where autonomous agents can be trained to perform complex network security operations.

Unlike traditional static datasets or rigid simulations, NSG offers a dynamic playground where:

- Attackers can learn to scan networks, find services, exploit vulnerabilities, and exfiltrate data.

- Defenders can learn to monitor traffic, detect anomalies, block malicious actors, and protect critical assets.

The environment adopts standard Reinforcement Learning (RL) principles to make it intuitive for anyone familiar with the field. It provides a richer game state representation than standard interfaces, allowing for more complex and realistic security interactions.

Why is it Useful?

For security researchers and AI practitioners, reliable evaluation of autonomous agents is a major challenge. NetSecGame addresses this by providing:

- Reproducibility: Standardized scenarios ensure that agent performance can be consistently measured and compared.

- Speed: As a simulation rather than a virtualization-based range, it avoids the overhead of running real machines, allowing millions of training steps in a fraction of the time a virtualized range would need.

- Flexibility: Users can define custom network topologies, services, vulnerabilities, and goals using simple YAML configurations.

- Realistic Noise: Includes a stochastic Global Defender (SIEM-like) simulation to provide realistic opposition and noise for attackers, even without a trained opponent.
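To make the idea of a stochastic defender concrete, here is a minimal sketch of one way such opposition could be modeled. It is not NetSecGame's Global Defender: the class `StochasticDefender`, the action names, and the probabilities are hypothetical. Each action type is detected with a fixed probability, and a seeded random generator keeps runs reproducible.

```python
import random

# Hypothetical per-action detection probabilities; not NetSecGame's values.
DETECTION_PROB = {
    "scan_network": 0.05,
    "find_services": 0.10,
    "exploit_service": 0.20,
    "exfiltrate_data": 0.30,
}


class StochasticDefender:
    """Detects each action independently with a per-type probability."""

    def __init__(self, probs, seed=None):
        self.probs = probs
        # A private, seeded generator makes the detection sequence repeatable.
        self.rng = random.Random(seed)

    def detects(self, action_type: str) -> bool:
        # Unknown action types are never detected in this sketch.
        return self.rng.random() < self.probs.get(action_type, 0.0)


if __name__ == "__main__":
    defender = StochasticDefender(DETECTION_PROB, seed=42)
    for action in ["scan_network", "exploit_service", "exfiltrate_data"]:
        print(action, "detected" if defender.detects(action) else "missed")
```

Two defenders built with the same seed produce the same detections for the same action sequence, which is what allows agent runs to be compared fairly.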

Running the Game

The simulation runs as a game server, where agents connect remotely to interact with the environment. This separation allows for flexibility in how and where agents are deployed.

Getting started with NetSecGame is straightforward. The easiest way to run the NetSecGame server is via Docker:

    docker pull stratosphereips/netsecgame
    docker run -d --rm --name nsg-server \
      -v $(pwd)/<scenario-configuration>.yaml:/netsecgame/netsecenv_conf.yaml \
      -v $(pwd)/logs:/netsecgame/logs \
      -p 9000:9000 stratosphereips/netsecgame

To run a specific scenario, you simply pass a Task Configuration file to the server (mapped via volumes), defining the network layout and objectives. You can find some examples in the examples directory.
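Before pointing an agent at the server, it can help to confirm that the published port is accepting connections. The helper below is a sketch using only the Python standard library. The function name `wait_for_port` and its defaults are assumptions, and it checks TCP reachability only, not that the game itself is ready.

```python
import socket
import time


def wait_for_port(host: str, port: int,
                  timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Open and immediately close a connection as a reachability probe.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


if __name__ == "__main__":
    # Port 9000 matches the -p 9000:9000 mapping in the docker run command.
    if wait_for_port("localhost", 9000):
        print("Game server port is reachable")
    else:
        print("Timed out waiting for the game server")
```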

For those who prefer local development or would like to modify the environment, you can clone the NetSecGame repository and install it from source:

    cd NetSecGame
    pip install -e ".[server]"

To run the server locally:

    python3 -m netsecgame.game.worlds.NetSecGame \
      --task_config=./examples/example_task_configuration.yaml \
      --game_port=9000

Creating Agents

To start building agents, you can install the package directly via pip:

    pip install netsecgame

We provide a companion repository, NetSecGameAgents, which contains reference implementations for several Random, Tabular, and LLM-based agents as well as other building blocks of the simulation and helper functions for the agents.

Minimal example of extending the Base Agent

    from netsecgame import BaseAgent, Action, GameState, Observation, AgentRole


    class MyAgent(BaseAgent):
        def __init__(self, host, port, role: str):
            super().__init__(host, port, role)

        def choose_action(self, observation: Observation) -> Action:
            # Your logic here to select the best action based on observation.state
            ...


    def main():
        # Connect to the game server
        agent = MyAgent(host="localhost", port=9000, role=AgentRole.Attacker)
        # Register the agent and get the initial observation
        observation = agent.register()
        # Main interaction loop
        while not observation.end:
            # Select the action to play
            action = agent.choose_action(observation)
            # Submit it to the server and get the new observation
            observation = agent.make_step(action)
        # Disconnect from the game
        agent.terminate_connection()


    if __name__ == "__main__":
        main()

Manual Play

We also provide an interactive TUI agent that allows you to play the game manually! This is a great way to understand the environment dynamics and test different strategies yourself.

To run the interactive agent:

    python3 -m agents.attackers.interactive_tui.interactive_tui

This starts a textual interface for the agent.

What's Coming Next

We are continuously improving NetSecGame to push the boundaries of AI in cybersecurity. Our roadmap includes:

- Expanded Scenario Library: We are working on a wider range of pre-built complex topologies to test agent generalization.

- Advanced Agents: New reference implementations for state-of-the-art RL and hierarchical agents are in the pipeline.

- Enhanced Dynamics: Future updates will include more granular network actions and deeper integration with realistic network traffic generation.

- Community Competitions: We plan to host challenges where users can pit their best attacker/defender agents against each other!

Learn More

- GitHub Repository: https://github.com/stratosphereips/game-states-maker

- Documentation: https://stratosphereips.github.io/NetSecGame/ - Comprehensive guides on configuration, agent creation, and architecture.

- PyPI Package: https://pypi.org/project/netsecgame/

- Docker Hub: https://hub.docker.com/r/stratosphereips/netsecgame

Contributing

We invite the community to try out NetSecGame, build agents, and contribute to the future of autonomous network security!

We especially welcome new agent implementations of all kinds: Attackers, Defenders, and Benign agents (simulation of normal user behavior). If you've built an agent you'd like to share:

- Fork the NetSecGameAgents repository.

- Implement your agent inheriting from BaseAgent.

- Submit a Pull Request with your agent code and a brief description of its strategy.

Whether you are a researcher looking for a new benchmark or a developer interested in cybersecurity, we look forward to seeing what you build!

The Attacking Active Directory Game - Can you outsmart the Machine Learning model? Help us by playing the evasion game!

The “Attacking Active Directory Game” is part of a project in which our researcher Ondrej Lukas developed a way to create fake Active Directory (AD) users as honey-tokens to detect attacks. His machine learning model was trained on real AD structures and can create a completely new fake user that is strategically placed in the structure of a company.

Machine Learning Leaks and Where to Find Them

Machine learning systems are now ubiquitous and work well in several applications, but how much information they can leak is still relatively unexplored. This blog post explores the most recent techniques that cause ML models to leak private data, gives an overview of the most important attacks, and explains why these types of attacks are possible in the first place.

Creating "Too much noise" in DEFCON AI village CTF challenge

During DEFCON 26, the AI village hosted a Jeopardy-style CTF with challenges related to AI/ML and security. I thought it would be fun to create a challenge for it, and I had an idea that revolved around Denoising Autoencoders (DA). The challenge was named “Too much noise”, but unfortunately nobody solved it during the CTF. In this blog post I would like to present the idea behind it and how one could go about solving it.